Can deep learning reconstruct holograms and improve optical microscopy?

A form of machine learning called deep learning is one of the key technologies behind recent advances in applications like real-time speech recognition and automated image and video labeling.

The approach, which uses multi-layered artificial neural networks to automate data analysis, has also shown significant promise for health care: it could be used, for example, to automatically identify abnormalities in patients’ X-rays, CT scans and other medical images and data.

In two new papers, UCLA researchers report that they have developed new uses for deep learning: reconstructing a hologram to form a microscopic image of an object and improving optical microscopy.

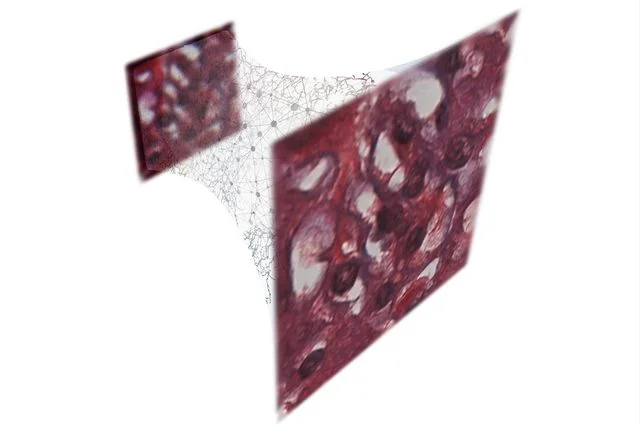

Their new holographic imaging technique produces better images than current methods that use multiple holograms, and it’s easier to implement because it requires fewer measurements and performs computations faster.

Credit: Ozcan Research Group/UCLA

The technique developed at UCLA uses deep learning to produce high-resolution pictures from lower-resolution microscopic images.

The research was led by Aydogan Ozcan, an associate director of the UCLA California NanoSystems Institute and the Chancellor’s Professor of Electrical and Computer Engineering at the UCLA Henry Samueli School of Engineering and Applied Science; and by postdoctoral scholar Yair Rivenson and graduate student Yibo Zhang, both of UCLA’s electrical and computer engineering department.

For one study, published in Light: Science and Applications, the researchers produced holograms of Pap smears (which are used to screen for cervical cancer), blood samples and breast tissue samples. In each case, the neural network learned to extract and separate the features of the true image of the object from undesired light interference and from other physical byproducts of the image reconstruction process.

“These results are broadly applicable to any phase recovery and holographic imaging problem, and this deep-learning–based framework opens up myriad opportunities to design fundamentally new coherent imaging systems, spanning different parts of the electromagnetic spectrum, including visible wavelengths and even X-rays,” said Ozcan, who also is an HHMI Professor at the Howard Hughes Medical Institute.

Another advantage of the new approach is that it requires no modeling of light–matter interaction and no solution of the wave equation, both of which can be challenging and time-consuming to model and compute for each individual sample and form of light.

“This is an exciting achievement since traditional physics-based hologram reconstruction methods have been replaced by a deep-learning–based computational approach,” Rivenson said.

Other members of the team were UCLA researchers Harun Günaydin and Da Teng, both members of Ozcan’s lab.

In the second study, published in the journal Optica, the researchers used the same deep-learning framework to improve the resolution and quality of optical microscopic images.

That advance could help diagnosticians or pathologists looking for very small-scale abnormalities in a large blood or tissue sample, and Ozcan said it demonstrates the powerful opportunities deep learning offers to improve optical microscopy for medical diagnostics and for other fields in engineering and the sciences.

Ozcan’s research is supported by the National Science Foundation–funded Precise Advanced Technologies and Health Systems for Underserved Populations and by the NSF, as well as the Army Research Office, the National Institutes of Health, the Howard Hughes Medical Institute, the Vodafone Americas Foundation and the Mary Kay Foundation.